Meet the World’s Most Advanced AI Glasses: Meta Ray-Ban Display

The intersection of fashion, optics, and artificial intelligence has long teased futurists, techies, and trendsetters alike. With the arrival of the Meta Ray-Ban Display, that intersection has shifted from speculation to reality. These AI glasses promise to reshape how we interact with the world: delivering notifications, immersive visuals, and real-time information—all layered seamlessly over everyday life. Whether walking down a city street, attending a meeting, or navigating public transit, the Meta Ray-Ban Display aims to keep users connected without pulling out a phone.

Design meets substance in these frames. Ray-Ban’s iconic style remains visible: classic shapes, premium materials, and the familiar details that have made the brand beloved for decades. Meta’s technology, discreetly integrated, delivers a heads-up display, voice assistance, gesture control, and always-on connectivity. Under the hood, powerful chips keep latency low and power use efficient while running the AI algorithms that interpret the environment. Cameras, motion sensors, and spatial audio make these glasses more than wearable fashion: they become mobile computing devices.

Battery life, display clarity, and comfort have been among the biggest challenges in wearable tech. The Meta Ray-Ban Display addresses these with optical-grade lenses, energy-efficient microOLED or comparable AR display panels, and a lightweight frame architecture. Weight distribution has been optimized so that long wear does not lead to discomfort, and heat-management components are built in unobtrusively. The glasses are designed to last from morning to evening without frequent recharging. These are essential improvements for users who demand performance without compromise.

Privacy and data security have drawn heavy scrutiny. Meta insists that on-device processing handles sensitive tasks: visual data is processed locally, and user consent is required for any cloud interaction. Facial recognition features can be disabled, and secure modes are included for public spaces. Transparency reports are meant to track app permissions, storage of visual recordings, and third-party access. Such protections are required if AI glasses are to earn trust.

What Sets Meta Ray-Ban Display Apart

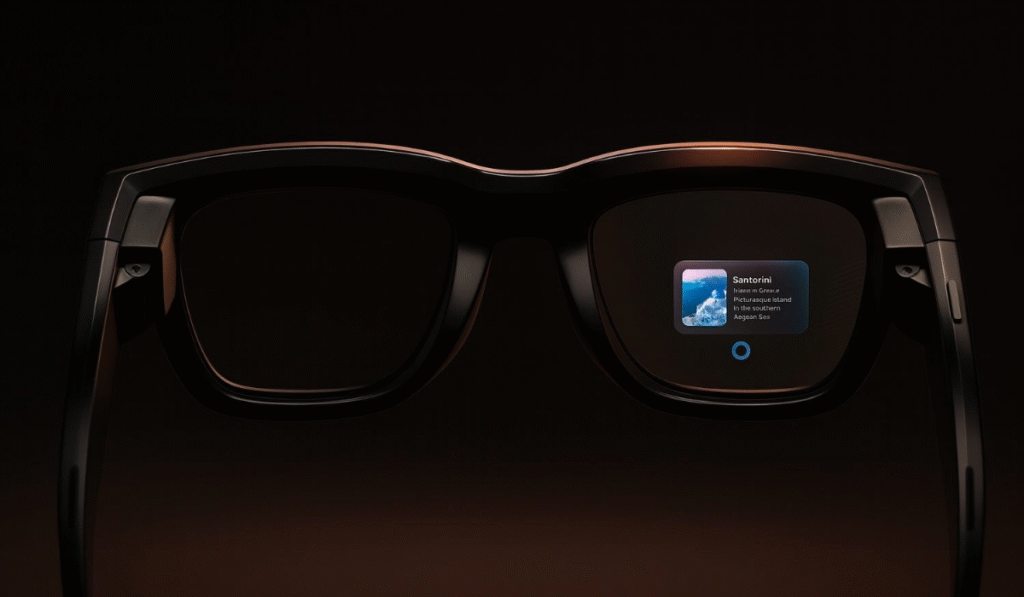

Compared with earlier smart glasses, the Meta Ray-Ban Display shows many improvements. First, the display technology has been pushed to new limits. High-resolution visuals, with sharp, bright images and excellent contrast, are projected through microOLED or equivalent AR panels. Colors appear vivid even in bright sunlight. Heads-up content overlays the real world with minimal lag. Gesture recognition enables intuitive control: a wave, a tap on the frame, or a voice command is enough to accept a call, adjust brightness, or switch apps. Second, the hardware design has been refined. The frames are crafted from lightweight metals or composite materials. The lenses are scratch-resistant, UV-protected, and, in some models, polarized. Battery cells have been redesigned to maximize lifespan, and charging is faster and more convenient through magnetic docks or USB-C connectors. Heat dissipates through hidden vents, so skin contact remains comfortable across long sessions. Third, smart software makes the experience seamless.

AI algorithms detect context automatically: notifications are muted during meetings, visual prompts are dimmed indoors, and spatial audio adjusts when traffic is nearby. Voice assistants are responsive, with natural-language understanding that can process multi-step commands. AI vision helps identify objects, translate text in real time, and provide navigation overlays. Finally, app and social integration runs deep, with apps tailored for everyday tasks such as camera capture, media playback, communication, mapping, and information retrieval. Content creators will find value in easy capture and sharing; enterprise users will find productivity tools. Together, these enhancements push the Meta Ray-Ban Display far ahead of earlier smart glasses: not prototypes, but consumer-ready, stylish devices that amplify human capabilities.

Uses and Daily Scenarios

Imagine walking through a dense urban area. Message notifications appear discreetly in your peripheral vision without blocking your view. Street signs are translated instantly. A voice command calls up your fitness stats. Media playback starts on command. Or picture attending an outdoor concert: earphones are replaced by spatial audio from speakers in the frame, letting music flow without isolating you completely. During a workday, you glance at subtle prompts to bring up calendar events or reminders, and document reviews or map overlays can be projected as you walk. All this happens with minimal distraction, so safety and awareness remain front and center.

Students, educators, content creators, and developers gain special utility. Lecturers might stream visuals into classrooms; students could capture experiments hands-free; creators could record vlogs, time-lapses, or travel content without lugging bulky gear. Developers can build applications that leverage AI vision, AR overlays, and context-aware tools. Health and fitness enthusiasts may benefit from posture coaching, environmental alerts, or voice-based performance feedback. For older users or those with mobility limitations, object detection, obstacle warnings, and voice navigation can increase independence.

Challenges and Considerations

Despite the device's promise, certain challenges persist. Battery capacity always competes with size and weight. Display brightness must scale for outdoor use without draining power. Privacy remains a constant concern, since any smart camera raises questions about consent in public. Comfort over long wear periods has to be tested across diverse head shapes. Durability, meaning resistance to sweat, temperature extremes, and water ingress, is essential. Price will be a barrier for many early adopters. Limited compatibility with existing apps and ecosystems might restrict usefulness initially. Lastly, software updates must be frequent to patch bugs, improve AI features, and maintain security.

What the Launch Means for the Wearables Market

The introduction of the Meta Ray-Ban Display signals a turning point. Wearable technology has moved beyond smartwatches and fitness bands. Devices that overlay computation and perception onto daily life now seem viable. Competing firms such as Apple, Google, Snap, and Bose will feel pressure to accelerate their own AI glasses roadmaps. Prices are expected to drop over time. As more accessories, apps, and use cases emerge, the ecosystem will grow richer. Regulations around data, privacy, and public safety will likely adapt. Standards for optical clarity, display safety (e.g. blue light, eye strain), and wireless protocol interoperability may be defined more strictly.

Final Thoughts

The Meta Ray-Ban Display is more than another gadget: it represents the convergence of AI, fashion, and augmented reality. It pushes the envelope in design, utility, and user experience. For those who want to stay ahead of the curve, these glasses offer a glimpse into the future of computing. As hardware improves, software matures, and ecosystems expand, the promise of AI glasses will become more tangible for everyday users. Time will show how much these glasses change the way people see the world, literally and figuratively.